2025

ROME is Forged in Adversity: Robust Distilled Datasets via Information Bottleneck

Zheng Zhou, Wenquan Feng, Qiaosheng Zhang, Shuchang Lyu, Qi Zhao, Guangliang Cheng

International Conference on Machine Learning (ICML) 2025 Poster (Acceptance Rate: 26.9%, 3,260/12,107)

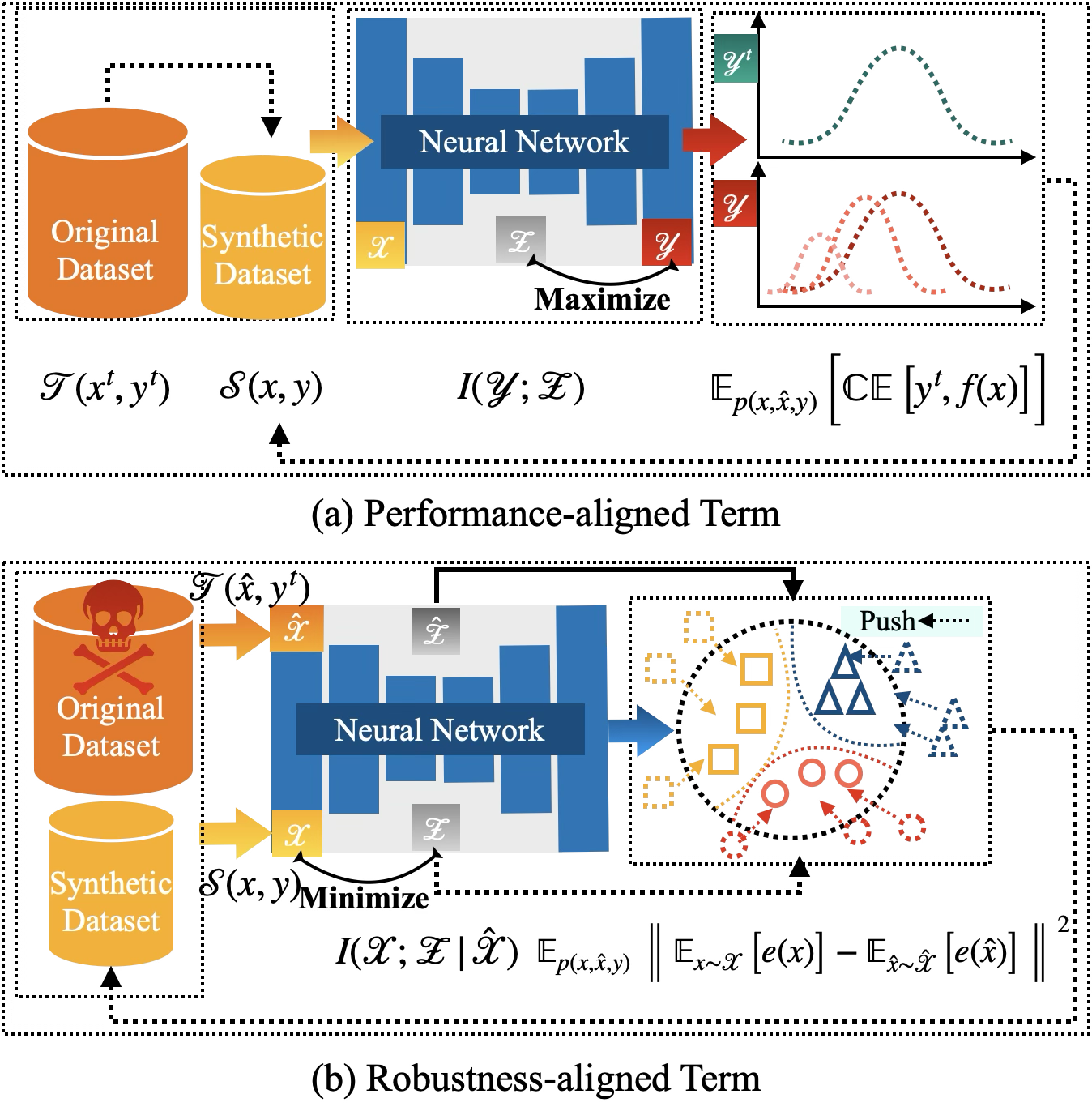

We introduce ROME, a method that enhances the adversarial robustness of dataset distillation by leveraging the information bottleneck principle, leading to significant improvements in robustness against both white-box and black-box attacks.

[Project Page] [Code] [Paper] [QbitAI (量子位)] [Chinese Association of Automation (CAA) Official]

2024

BEARD: Benchmarking the Adversarial Robustness for Dataset Distillation

Zheng Zhou, Wenquan Feng, Shuchang Lyu, Guangliang Cheng, Xiaowei Huang, Qi Zhao

Under review. 2024

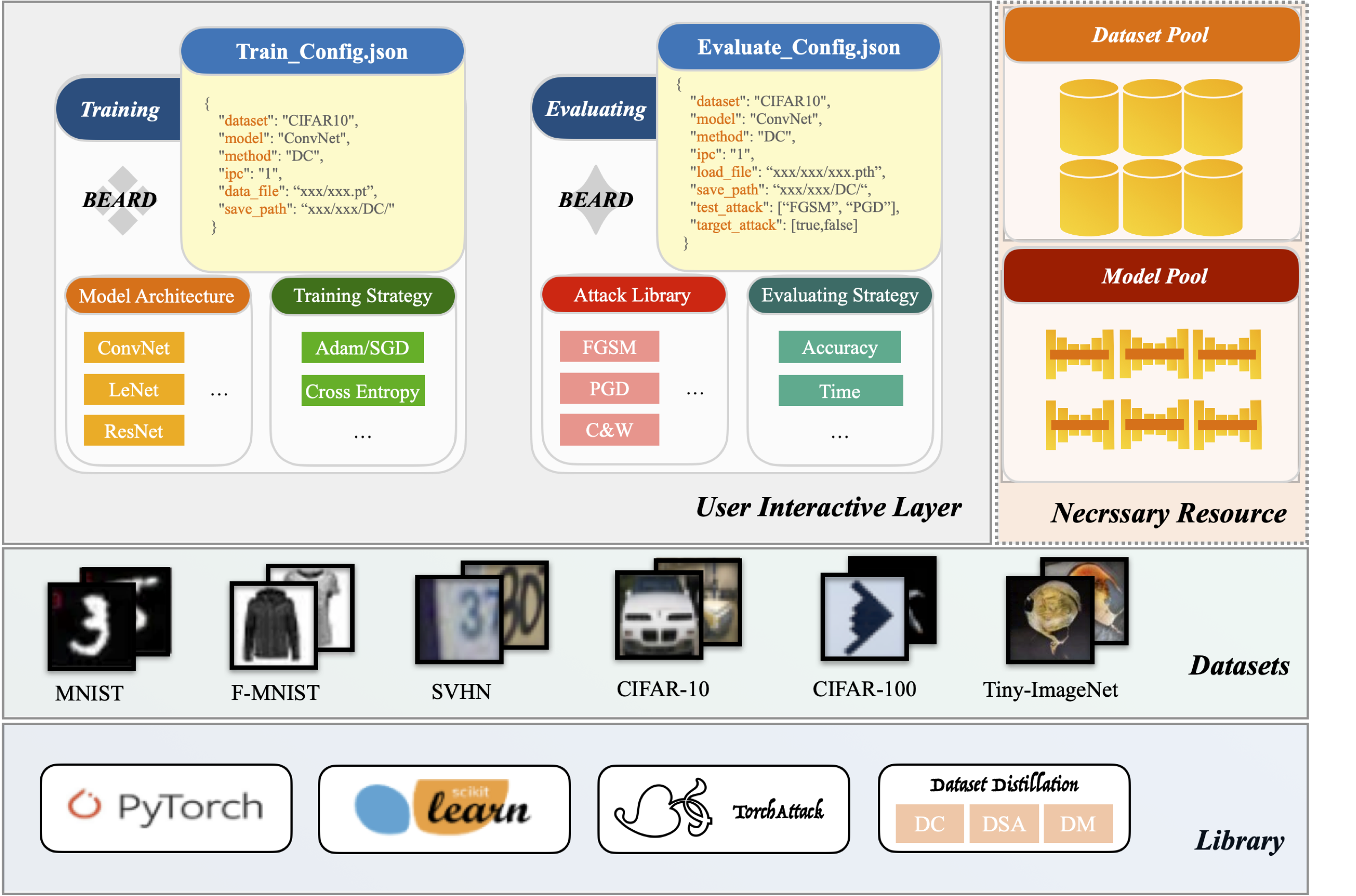

BEARD is a unified benchmark for evaluating the adversarial robustness of dataset distillation methods, providing standardized metrics and tools to support reproducible research.

BACON: Bayesian Optimal Condensation Framework for Dataset Distillation

Zheng Zhou, Hongbo Zhao, Guangliang Cheng, Xiangtai Li, Shuchang Lyu, Wenquan Feng, Qi Zhao

Under review. 2024

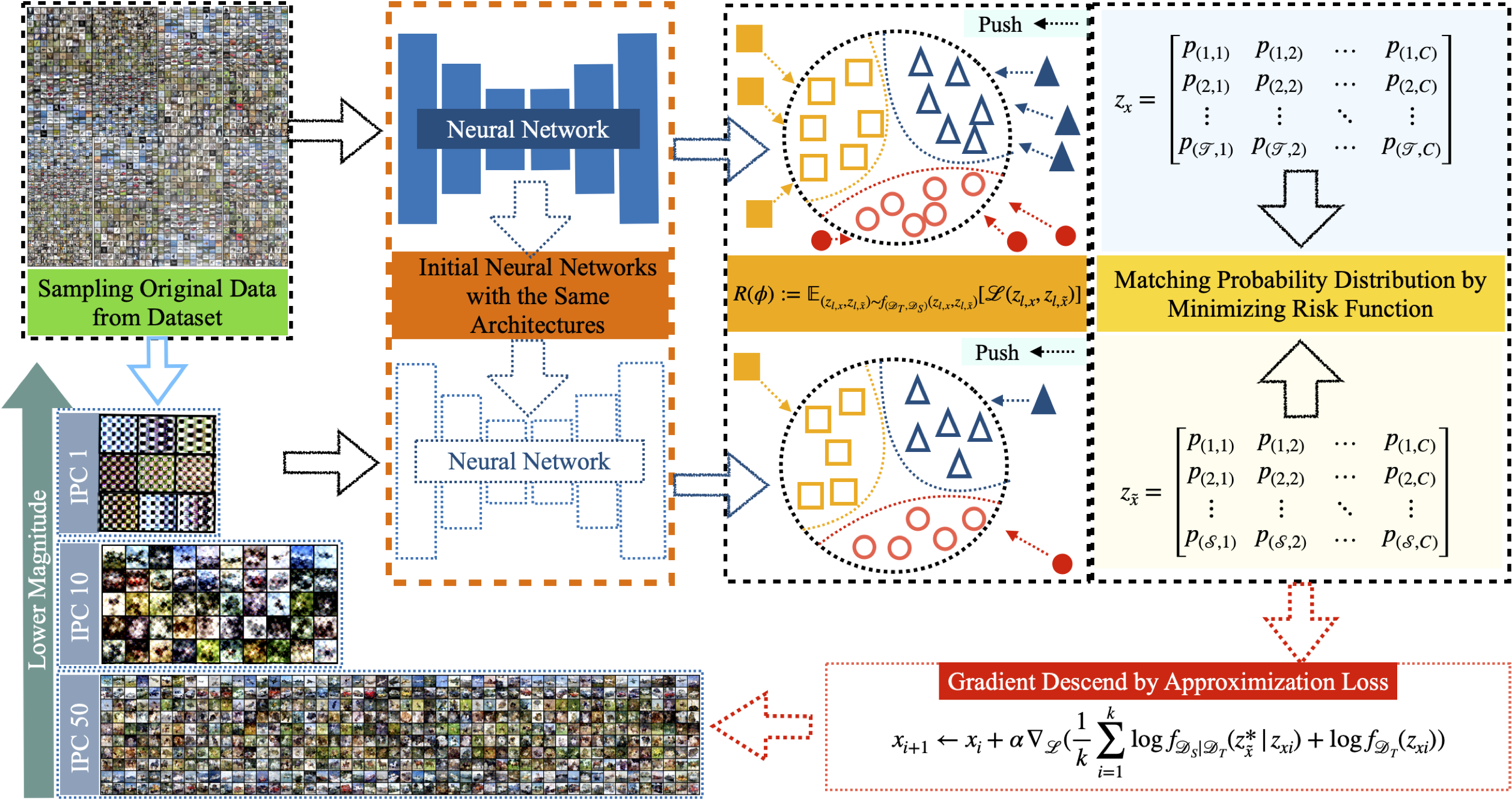

This work presents BACON, the first Bayesian framework for dataset distillation, providing a theoretical grounding aimed at improving performance.

2023

MVPatch: More Vivid Patch for Adversarial Camouflaged Attacks on Object Detectors in the Physical World

Zheng Zhou, Hongbo Zhao, Ju Liu, Qiaosheng Zhang, Liwei Geng, Shuchang Lyu, Wenquan Feng

Under review. 2023

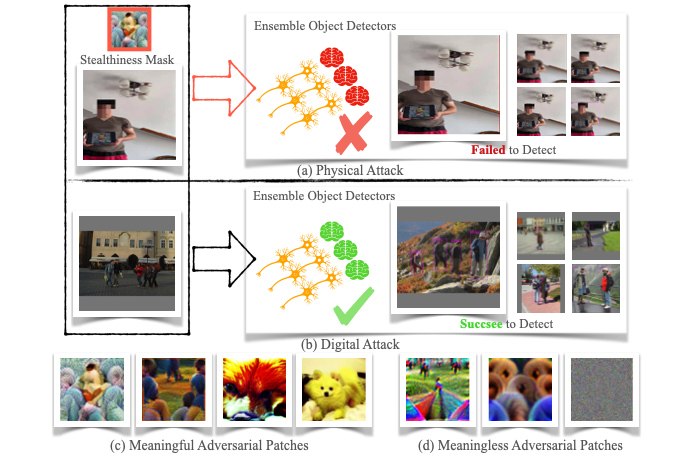

This work proposes MVPatch, an adversarial patch designed to be both highly transferable across detectors and visually stealthy.

2022

Adversarial Examples Are Closely Relevant to Neural Network Models - A Preliminary Experiment Explore

Zheng Zhou, Ju Liu, Yanyang Han

International Conference on Swarm Intelligence (ICSI) 2022

This study explores how adversarial examples impact neural networks by analyzing their sensitivity to different architectures, activation functions, and loss functions.
