Ph.D. Candidate @ BUAA

Former Embedded Software Engineer @ Haier

I am a Ph.D. student at Beihang University. From 2018 to 2023, I worked as an Embedded Software Engineer at Haier Group while completing a Master's degree at Shandong University, supervised by Prof. Ju Liu. I previously studied at TU Ilmenau in Germany and hold a Bachelor's degree from Qingdao University of Science and Technology.

My research focuses on the reliability and efficiency of machine learning, with particular emphasis on model robustness against adversarial examples and on improving training efficiency through techniques such as dataset distillation.

I am currently seeking internship opportunities and research collaborations. Please feel free to contact me via email.

Education

- Beihang University, School of Electronic and Information Engineering: Ph.D. Candidate in Electronic Engineering, Sep. 2023 - present
- Shandong University: M.Eng. in Electronic Engineering, Sep. 2020 - Jun. 2023
- TU Ilmenau: Visiting Student in Electronic Engineering, Oct. 2016 - Oct. 2018
- Qingdao University of Science and Technology: B.Eng. in Mechanical Engineering and Automation, Sep. 2012 - Jun. 2016

Experience

- Haier Group: Embedded Software Engineer, Oct. 2018 - Jun. 2023

Honors & Awards

- Top Reviewer at NeurIPS 2024, Sep. 2023
- Silver Award at ASCEND Competition for Re-ID, Oct. 2022
- Oral Presentation at International Conference on Swarm Intelligence (ICSI 2022), Sep. 2022

News

Services

Conference Reviewer

Journal Reviewer

Academic Talks

Selected Publications

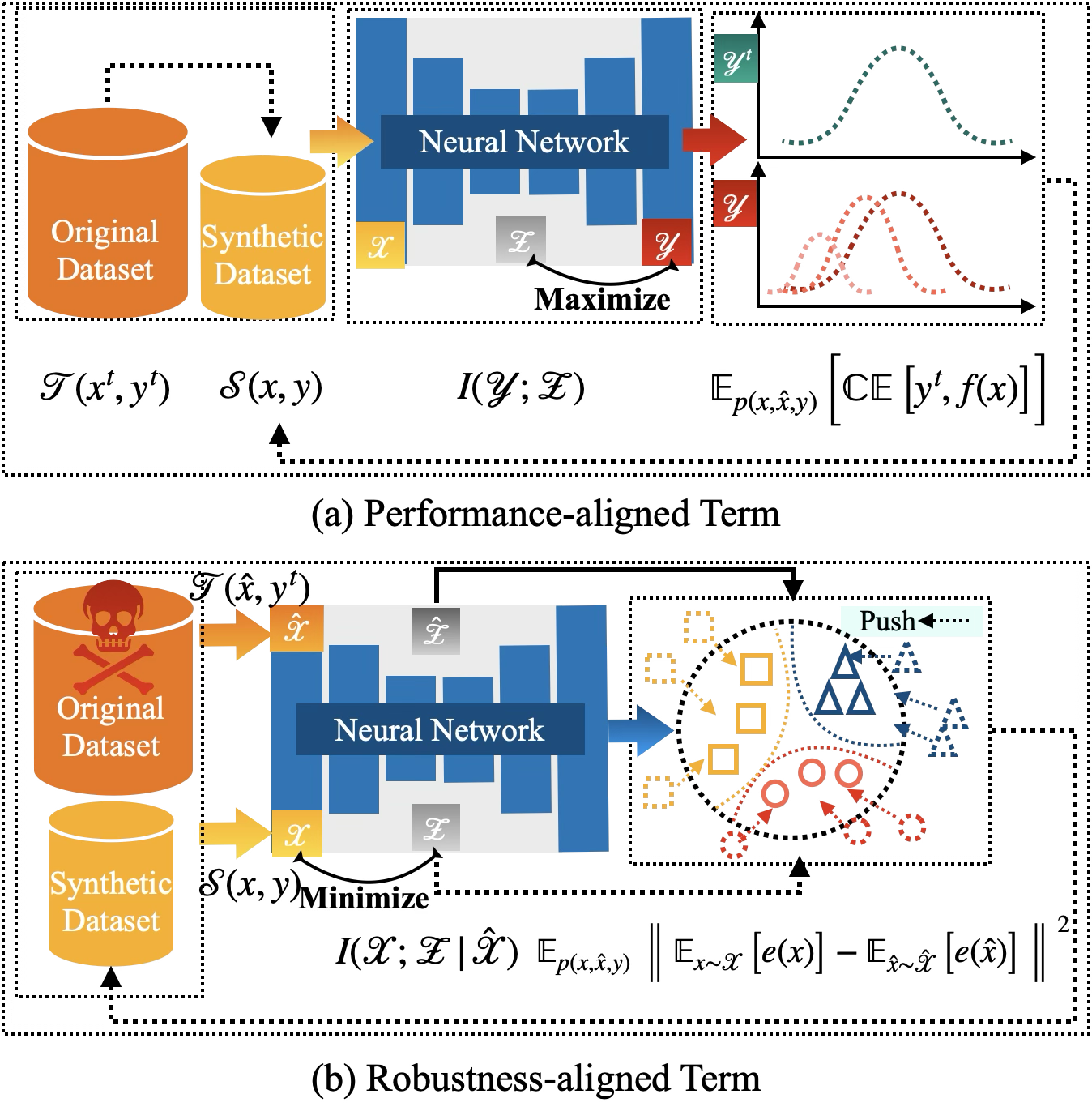

ROME is Forged in Adversity: Robust Distilled Datasets via Information Bottleneck

Zheng Zhou, Wenquan Feng, Qiaosheng Zhang, Shuchang Lyu, Qi Zhao, Guangliang Cheng

International Conference on Machine Learning (ICML) 2025 Poster (Acceptance Rate: 26.9%, 3,260/12,107)

We introduce ROME, a method that enhances the adversarial robustness of dataset distillation by leveraging the information bottleneck principle, leading to significant improvements in robustness against both white-box and black-box attacks.

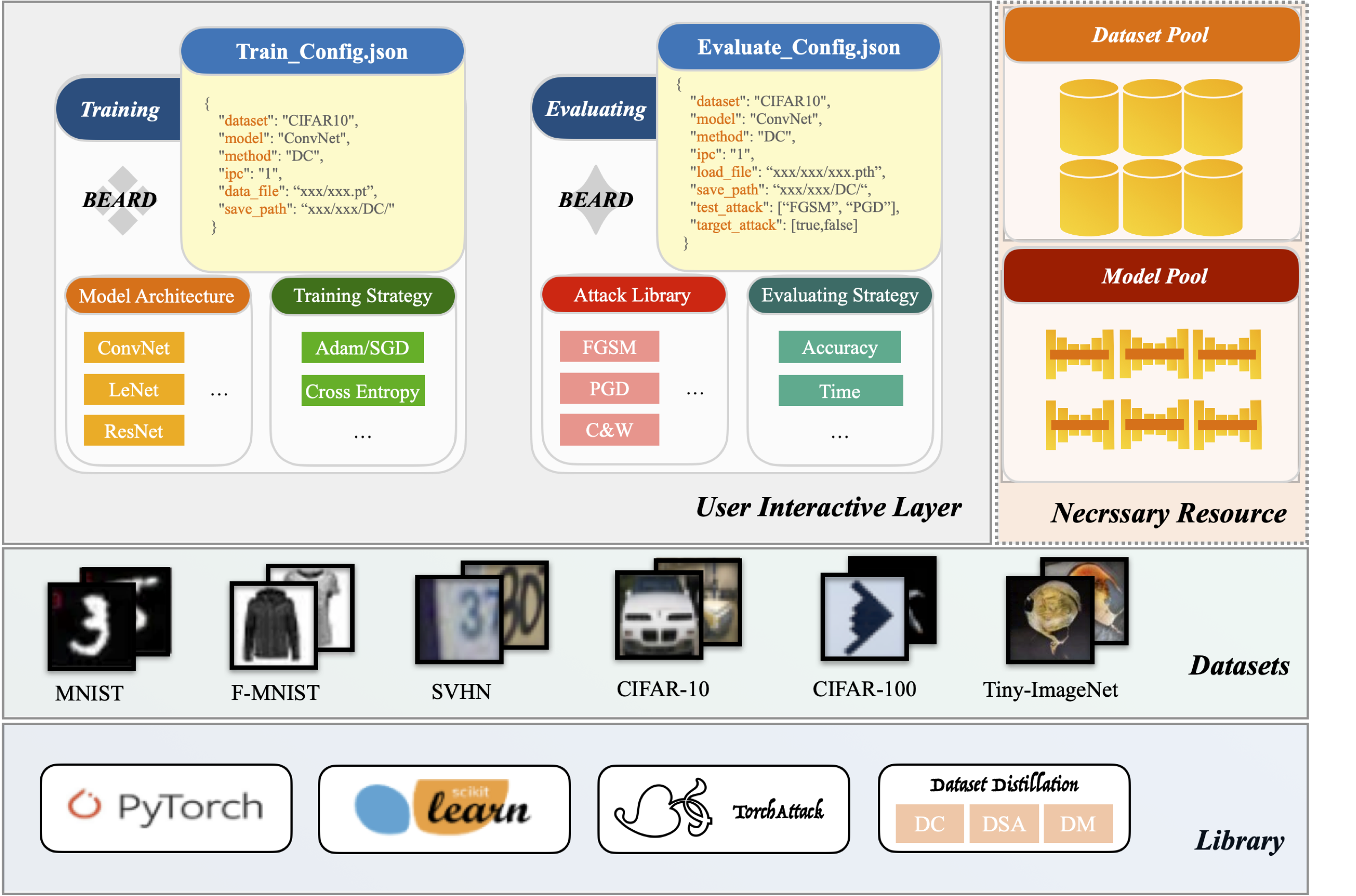

BEARD: Benchmarking the Adversarial Robustness for Dataset Distillation

Zheng Zhou, Wenquan Feng, Shuchang Lyu, Guangliang Cheng, Xiaowei Huang, Qi Zhao

Under review. 2024

BEARD is a unified benchmark for evaluating the adversarial robustness of dataset distillation methods, providing standardized metrics and tools to support reproducible research.

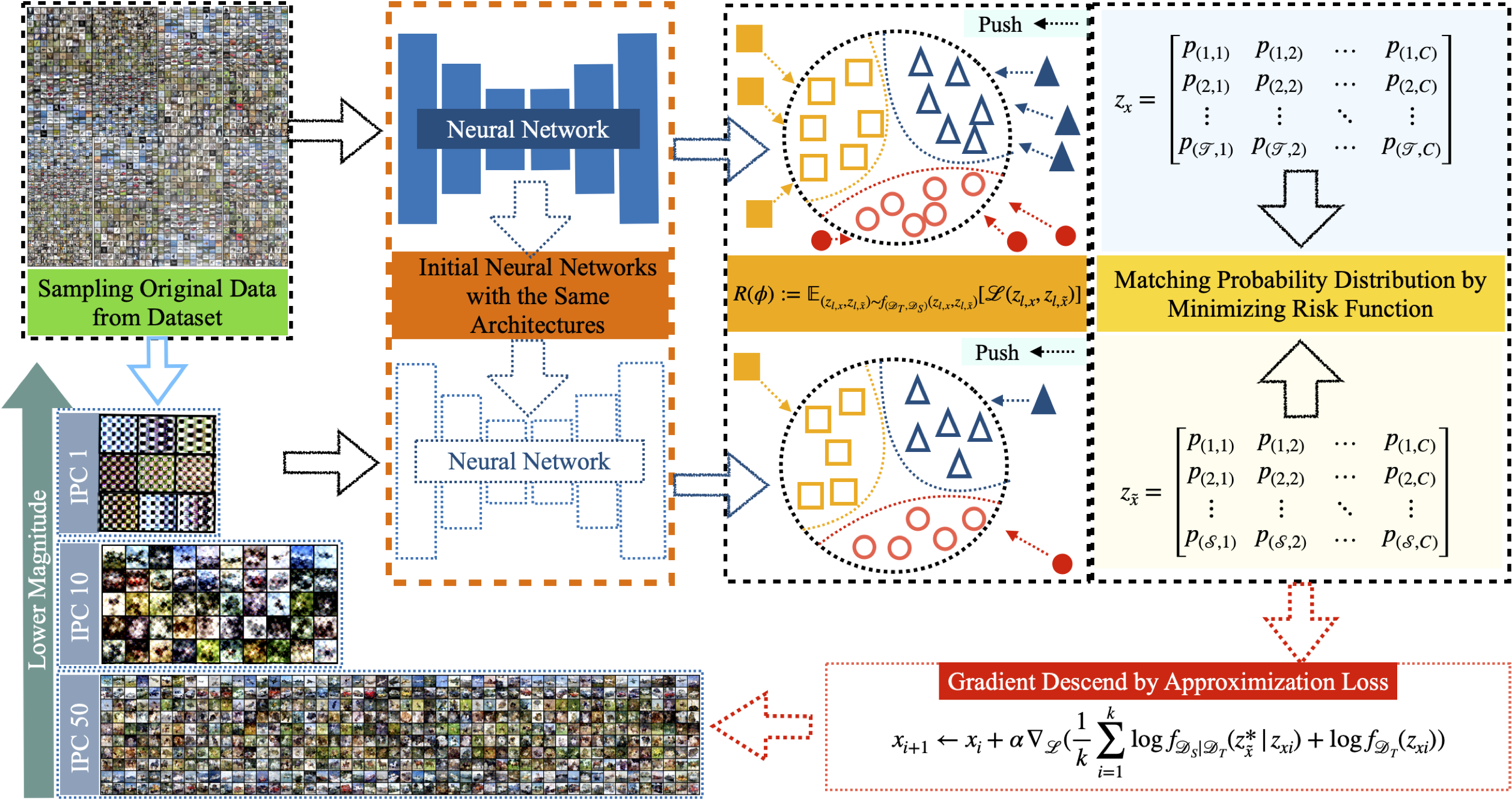

BACON: Bayesian Optimal Condensation Framework for Dataset Distillation

Zheng Zhou, Hongbo Zhao, Guangliang Cheng, Xiangtai Li, Shuchang Lyu, Wenquan Feng, Qi Zhao

Under review. 2024

This work presents BACON, the first Bayesian framework for dataset distillation, which provides a theoretical foundation for improving performance.
